20. Uncertainty and sensitivity#

20.1. Monte Carlo uncertainty analysis#

20.1.1. Model objects#

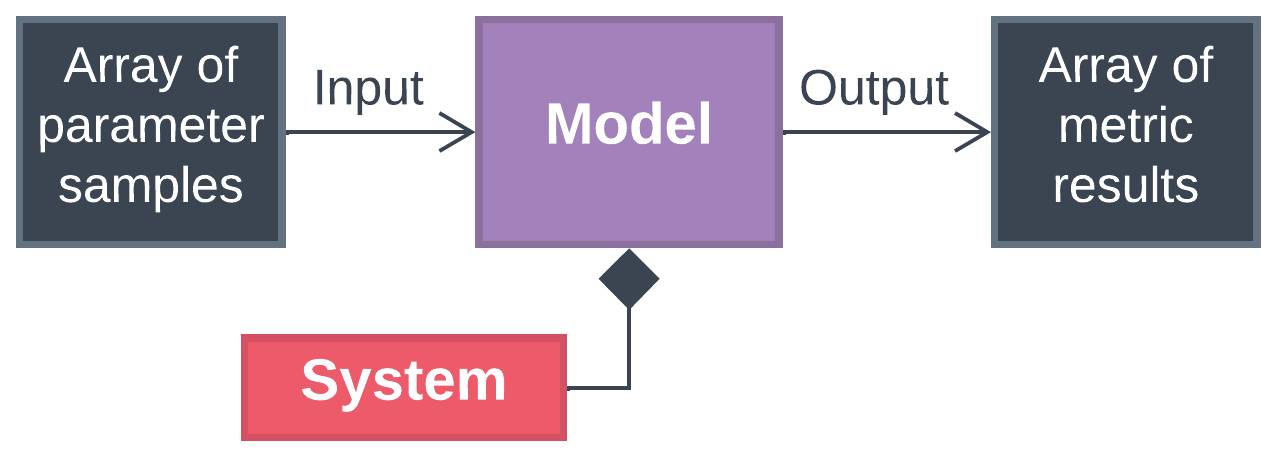

BioSTEAM streamlines uncertainty analysis with an object-oriented framework where a Model object samples from parameter distributions and reevaluates biorefinery metrics at each new condition. In essence, a Model object sets parameter values, simulates the biorefinery system, and evaluates metrics across an array of samples.

Model objects are able to cut down simulation time by sorting the samples to minimize perturbations to the system between simulations. This decreases the number of iterations required to solve recycle systems. The following examples show how Model objects can be used.

20.1.2. Create parameter distributions#

Let’s first learn how to create common parameter distributions using chaospy.

A triangular distribution is typically used when the parameter is uncertain within given limits, but is heuristically known to take a particular value. Create a triangular distribution:

[1]:

from warnings import filterwarnings; filterwarnings('ignore')

from chaospy import distributions as shape

lower_bound = 0

most_probable = 0.5

upper_bound = 1

triang = shape.Triangle(lower_bound, most_probable, upper_bound)

print(triang)

Triangle(0, 0.5, 1)

A uniform distribution is used when the theoretical limits of the parameter is known, but no information is available to discern which values are more probable. Create a uniform distribution:

[2]:

from chaospy import distributions as shape

lower_bound = 0

upper_bound = 1

unif = shape.Uniform(lower_bound, upper_bound)

print(unif)

Uniform()

A large set of distributions are available through chaospy, but generally triangular and uniform distributions are the most widely used to describe the uncertainty of parameters in Monte Carlo analyses.

20.1.3. Parameter objects#

Parameter objects are simply structures BioSTEAM uses to manage parameter values and distributions.

This section is just to get you familiar with Parameter objects. All the fields that a Parameter object can have are described below. Don’t worry if you don’t fully understand what each field does. The main idea is that we need to define the setter function that the Parameter object uses to set the parameter value to the element (e.g. unit operation, stream, etc.) it pertains to. We can also pass a distribution (i.e. a chaospy distribution) that will be accessible for Model objects

to sample from. As for the name, units of measure, and the baseline value, these are all for bookkeeping purposes. BioSTEAM incorporates the name and units of measure when creating a DataFrame of Monte Carlo results and parameter distributions. Parameter objects are created by Model objects which implicitly pass both the system affected by the parameter, and the simulate function. But don’t worry about these last two fields, they are automatically added by the Model

object when creating the parameter.

simulate: [function] Should simulate parameter effects.

system: [System] System associated to parameter.

name: [str] Name of parameter.

units: [str] Units of measure.

baseline: [float] Baseline value of parameter.

element: [object] Element associated to parameter.

setter: [function] Should set the parameter.

distribution: [chaospy.Dist] Parameter distribution.

Hopefully things will be become clearer as we start to create the parameter objects in the following sections…

20.1.4. Create a model object#

Model objects are used to evaluate metrics around multiple parameters of a system.

Create a Model object of the sugarcane biorefinery with internal rate of return and utility cost as metrics:

[3]:

from biorefineries import sugarcane as sc

import biosteam as bst

sc.load()

solve_IRR = sc.tea.solve_IRR

total_utility_cost = lambda: sc.tea.utility_cost / 10**6 # In 10^6 USD/yr

metrics = (bst.Metric('Internal rate of return', sc.tea.solve_IRR, '%'),

bst.Metric('Utility cost', total_utility_cost, '10^6 USD/yr'))

model = bst.Model(sc.sys, metrics)

The Model object begins with no parameters:

[4]:

model

Model:

(No parameters)

metrics: Internal rate of return [%]

Utility cost [10^6 USD/yr]

20.1.5. Add cost parameters#

A cost parameter changes cost requirements but not affect mass and energy balances.

Add number of fermentation reactors as a “cost” parameter:

[5]:

R301 = bst.main_flowsheet.unit.R301 # The Fermentation Unit

@model.parameter(

name='Number of reactors',

element=R301, kind='cost',

distribution=shape.Uniform(4, 10),

hook=lambda N: int(round(N)) # Make sure value is an integer

)

def set_N_reactors(N):

R301.N = N

The decorator uses the function to create a Parameter object and add it to the model:

[6]:

parameters = model.get_parameters()

parameters

[6]:

(<Parameter: [Fermentation-R301] Number of reactors>,)

Calling a Parameter object will update the parameter and results:

[7]:

set_N_reactors_parameter = parameters[0]

set_N_reactors_parameter(5)

print(f'Puchase cost at 5 reactors: ${R301.purchase_cost:,.0f}')

set_N_reactors_parameter(8)

print(f'Puchase cost at 8 reactors: ${R301.purchase_cost:,.0f}')

Puchase cost at 5 reactors: $2,623,357

Puchase cost at 8 reactors: $3,137,039

Add the fermentation unit base cost as a “cost” parameter with a triangular distribution:

[8]:

reactors_cost_coefficients = R301.cost_items['Reactors']

mid = reactors_cost_coefficients.n # Most probable at baseline value

lb = mid - 0.1 # Minimum

ub = mid + 0.1 # Maximum

@model.parameter(element=R301, kind='cost',

distribution=shape.Triangle(lb, mid, ub))

def set_exponential_cost_coefficient(exponential_cost_coefficient):

reactors_cost_coefficients.n = exponential_cost_coefficient

Note that if the name was not defined, it defaults to the setter’s signature:

[9]:

model.get_parameters()

[9]:

(<Parameter: [Fermentation-R301] Number of reactors>,

<Parameter: [Fermentation-R301] Exponential cost coefficient>)

20.1.6. Add isolated parameters#

An isolated parameter does not affect Unit objects in any way.

Add feedstock price as a “isolated” parameter:

[10]:

feedstock = sc.sugarcane # The feedstock stream

lb = feedstock.price * 0.9 # Minimum price

ub = feedstock.price * 1.1 # Maximum price

@model.parameter(element=feedstock, kind='isolated', units='USD/kg',

distribution=shape.Uniform(lb, ub))

def set_feed_price(feedstock_price):

feedstock.price = feedstock_price

20.1.7. Add coupled parameters#

A coupled parameter affects mass and energy balances of the system.

Add fermentation efficiency as a “coupled” parameter:

[11]:

@model.parameter(element=R301, kind='coupled',

distribution=shape.Triangle(0.85, 0.90, 0.95))

def set_fermentation_efficiency(efficiency):

R301.efficiency = efficiency

Add crushing capacity as a “coupled” parameter:

[12]:

lb = feedstock.F_mass * 0.9

ub = feedstock.F_mass * 1.1

@model.parameter(element=feedstock, kind='coupled',

distribution=shape.Uniform(lb, ub))

def set_crushing_capacity(capacity):

feedstock.F_mass = capacity

20.1.8. Evaluate metric given a sample#

The model can be called to evaluate a sample of parameters.

Note that all parameters are stored in the model in the same order they were added:

[13]:

model

Model:

parameters: Fermentation-R301 - Number of reactors

Fermentation-R301 - Exponential cost coefficient

Stream-Sugarcane - Feedstock price [USD/kg]

Fermentation-R301 - Efficiency

Stream-Sugarcane - Capacity

metrics: Internal rate of return [%]

Utility cost [10^6 USD/yr]

Get dictionary that contain DataFrame objects of parameter distributions:

[14]:

df_dct = model.get_distribution_summary()

df_dct['Uniform']

[14]:

| Element | Name | Units | Shape | lower | upper | |

|---|---|---|---|---|---|---|

| 0 | Fermentation-R301 | Number of reactors | Uniform | 4 | 10 | |

| 1 | Stream-Sugarcane | Feedstock price | USD/kg | Uniform | 0.0311 | 0.038 |

| 2 | Stream-Sugarcane | Capacity | Uniform | 3e+05 | 3.67e+05 |

[15]:

df_dct['Triangle']

[15]:

| Element | Name | Units | Shape | lower | midpoint | upper | |

|---|---|---|---|---|---|---|---|

| 0 | Fermentation-R301 | Exponential cost coefficient | Triangle | 0.4 | 0.5 | 0.6 | |

| 1 | Fermentation-R301 | Efficiency | Triangle | 0.85 | 0.9 | 0.95 |

Evaluate sample:

[16]:

model([8, 100000, 0.040, 0.85, feedstock.F_mass]) # Returns metrics (IRR and utility cost)

[16]:

- Internal rate of return [%] 0.0874

Utility cost [10^6 USD/yr] -18.2

dtype: float64

20.1.9. Monte Carlo#

Sample from a joint distribution, and simulate samples:

[17]:

import numpy as np

N_samples = 200

rule = 'L' # For Latin-Hypercube sampling

np.random.seed(1234) # For consistent results

samples = model.sample(N_samples, rule)

model.load_samples(samples)

model.evaluate(

notify=50 # Also print elapsed time after 50 simulations

)

[50] Elapsed time: 9 sec

[100] Elapsed time: 17 sec

[150] Elapsed time: 26 sec

[200] Elapsed time: 35 sec

Although the system uses the last solution as an initial guess for the next, each scenario may be vastly different and there is no guarantee that this initial guess would be any better. Luckily, BioSTEAM can minimize perturbations to the system between simulations by pre-sorting the scenarios as well as use convergence data from past simulations to make better starting guesses for accelerated recycle convergence:

[18]:

model.load_samples(

samples,

optimize=True # Optimize simulation order

)

convergence_model = bst.ConvergenceModel(

# Recycle loop prediction will be based on model parameters

predictors=model.parameters,

)

model.evaluate(

notify=50, # Also print elapsed time after 100 simulations

convergence_model=convergence_model,

)

[50] Elapsed time: 9 sec

[100] Elapsed time: 17 sec

[150] Elapsed time: 25 sec

[200] Elapsed time: 32 sec

Notice how it took less time (~%5 less) to run the Monte Carlo simulations after optimizing the simulation order and/or predicting recycle loop convergence.

All data from simulation is stored in <Model>.table:

[19]:

model.table # All evaluations are stored as a pandas DataFrame

[19]:

| Element | Fermentation-R301 | Stream-Sugarcane | Fermentation-R301 | Stream-Sugarcane | - | ||

|---|---|---|---|---|---|---|---|

| Feature | Number of reactors | Exponential cost coefficient | Feedstock price [USD/kg] | Efficiency | Capacity | Internal rate of return [%] | Utility cost [10^6 USD/yr] |

| 0 | 4 | 0.491 | 0.0361 | 0.906 | 3.4e+05 | 0.13 | -18.3 |

| 1 | 4 | 0.52 | 0.0316 | 0.922 | 3.07e+05 | 0.155 | -16.4 |

| 2 | 5 | 0.55 | 0.0362 | 0.903 | 3.11e+05 | 0.121 | -16.8 |

| 3 | 7 | 0.475 | 0.0375 | 0.908 | 3.64e+05 | 0.128 | -19.6 |

| 4 | 7 | 0.506 | 0.0346 | 0.894 | 3.41e+05 | 0.136 | -18.5 |

| ... | ... | ... | ... | ... | ... | ... | ... |

| 195 | 4 | 0.573 | 0.0341 | 0.9 | 3.22e+05 | 0.139 | -17.3 |

| 196 | 5 | 0.465 | 0.0325 | 0.939 | 3.25e+05 | 0.16 | -17.3 |

| 197 | 9 | 0.484 | 0.0358 | 0.896 | 3.14e+05 | 0.124 | -16.9 |

| 198 | 8 | 0.459 | 0.0341 | 0.877 | 3.62e+05 | 0.136 | -19.7 |

| 199 | 5 | 0.535 | 0.0334 | 0.886 | 3.35e+05 | 0.14 | -18.1 |

200 rows × 7 columns

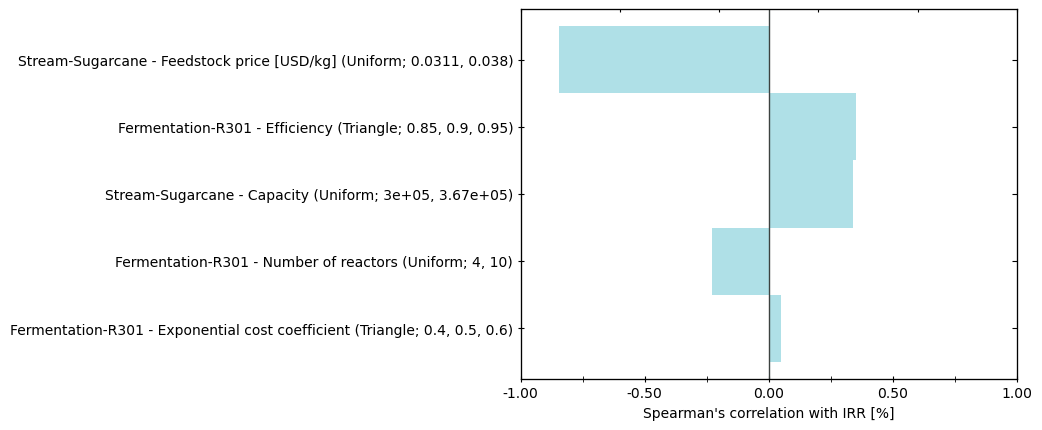

20.2. Sensitivity with Spearman’s rank order correlation#

Model objects also presents methods for sensitivity analysis such as Spearman’s correlation, a measure of monotonicity between variables:

[20]:

df_rho, df_p = model.spearman_r()

print(df_rho['-', 'Internal rate of return [%]'])

Element Parameter

Fermentation-R301 Number of reactors -0.228

Exponential cost coefficient 0.0475

Stream-Sugarcane Feedstock price [USD/kg] -0.847

Fermentation-R301 Efficiency 0.352

Stream-Sugarcane Capacity 0.338

Name: (-, Internal rate of return [%]), dtype: float64

Create a tornado plot of Spearman’s correlation between all parameters and IRR:

[21]:

bst.plots.plot_spearman_1d(df_rho['-', 'Internal rate of return [%]'],

index=[i.describe() for i in model.parameters],

name='IRR [%]')

[21]:

(<Figure size 640x480 with 3 Axes>,

<Axes: xlabel="Spearman's correlation with IRR [%]">)

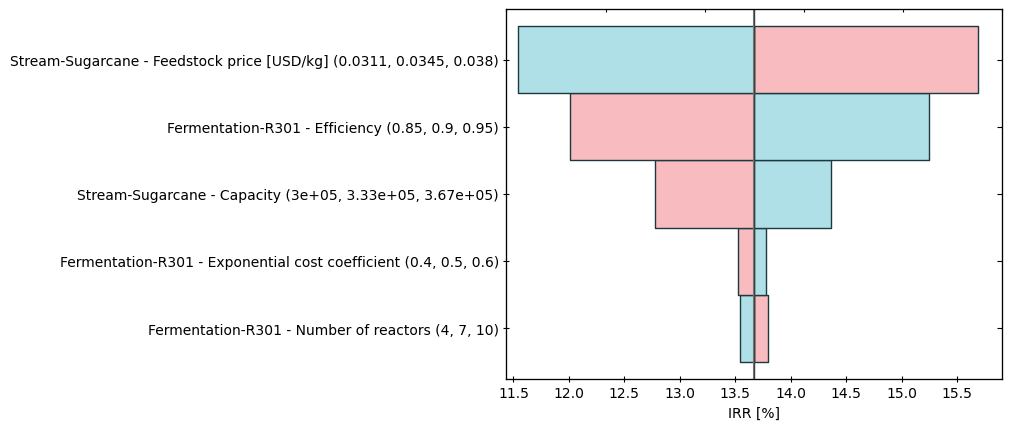

20.3. Single point sensitivity#

A quick way to evaluate sentivity is through single point sensitivity analysis, whereby a metric is evaluated at the baseline and at the lower and upper limits of each parameter. This method ignores the interactions between parameters and their distributions, but can help screen whether a system is sensitive to a given parameter. Model objects also facilitate this analysis:

[22]:

baseline, lower, upper = model.single_point_sensitivity()

print('BASELINE')

print('--------')

print(baseline)

print()

print('LOWER')

print('-----')

print(lower)

print()

print('UPPER')

print('-----')

print(upper)

BASELINE

--------

Element Feature

- Internal rate of return [%] 0.137

Utility cost [10^6 USD/yr] -17.9

dtype: float64

LOWER

-----

Element -

Feature Internal rate of return [%] Utility cost [10^6 USD/yr]

Element Feature

Fermentation-R301 Number of reactors 0.138 -17.9

Exponential cost coefficient 0.135 -17.9

Stream-Sugarcane Feedstock price [USD/kg] 0.157 -17.9

Fermentation-R301 Efficiency 0.12 -18.2

Stream-Sugarcane Capacity 0.128 -16.1

UPPER

-----

Element -

Feature Internal rate of return [%] Utility cost [10^6 USD/yr]

Element Feature

Fermentation-R301 Number of reactors 0.135 -17.9

Exponential cost coefficient 0.138 -17.9

Stream-Sugarcane Feedstock price [USD/kg] 0.115 -17.9

Fermentation-R301 Efficiency 0.152 -17.7

Stream-Sugarcane Capacity 0.144 -19.8

Create a tornado plot of the lower and upper values of the IRR:

[23]:

IRR, utility_cost = model.metrics

metric_index = IRR.index

index = [i.describe(distribution=False) # Instead of displaying distribution, it displays lower, baseline, and upper values

for i in model.parameters]

bst.plots.plot_single_point_sensitivity(100 * baseline[metric_index],

100 * lower[metric_index],

100 * upper[metric_index],

name='IRR [%]',

index=index)

[23]:

(<Figure size 640x480 with 3 Axes>, <Axes: xlabel='IRR [%]'>)

Note that blue represents the upper limit while red the lower limit.